Nvidia, AMD, and Intel have each unveiled their latest AI chip advancements in Taiwan, intensifying the competitive race in the sector.

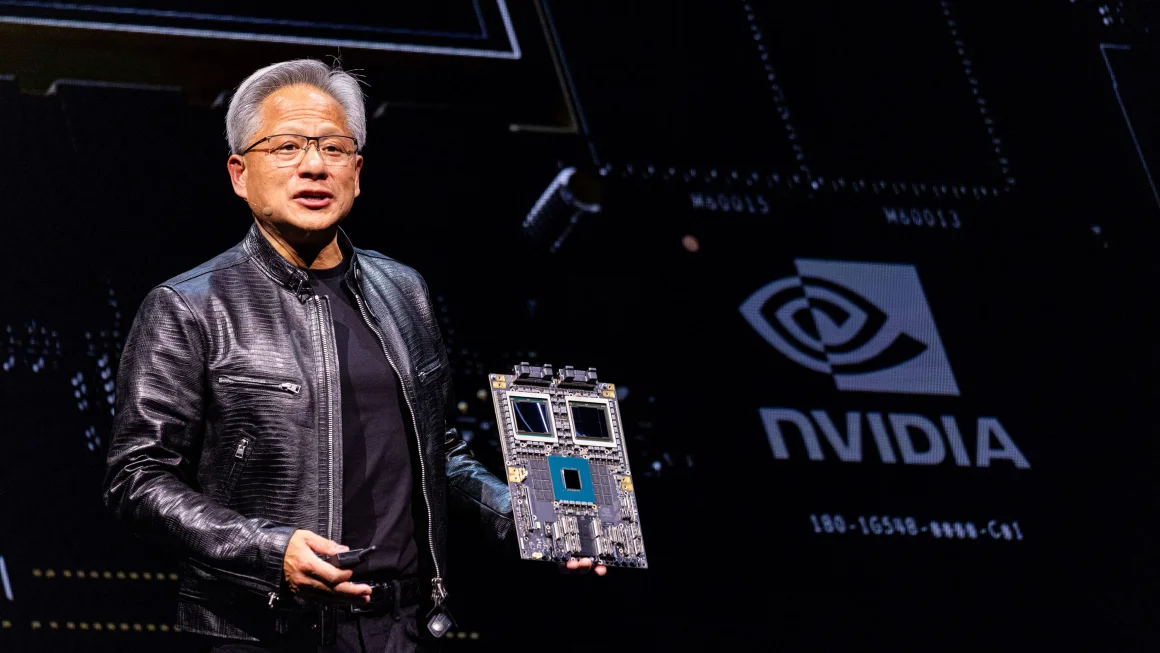

Jensen Huang, CEO of Nvidia, announced on Sunday that the company will introduce its most advanced AI chip platform, named Rubin, in 2026. Rubin will succeed Blackwell, which was announced in March and touted as the “world’s most powerful chip” for data centers. The new platform will feature new graphics processing units (GPUs), a central processing unit (CPU) named Vera, and advanced networking chips. Speaking at National Taiwan University in Taipei ahead of the Computex tech trade show, Huang said, “Today, we’re at the cusp of a major shift in computing. The intersection of AI and accelerated computing is set to redefine the future.” He also outlined a plan to release new semiconductors on a “one-year rhythm.”

Nvidia, a market leader whose shares have surged over the past year, holds approximately 70% of AI semiconductor sales. Richard Windsor, founder of Radio Free Mobile, noted, “Nvidia clearly intends to keep its dominance for as long as possible and in the current generation, there is nothing really on the horizon to challenge that.”

Despite Nvidia’s dominance, competition is mounting. On Monday, AMD CEO Lisa Su revealed the company’s latest AI processors in Taipei and detailed a roadmap for new products over the next two years. The next-generation MI325X accelerator will be available in the fourth quarter of this year. Su said, “AI is our number one priority, and we’re at the beginning of an incredibly exciting time for the industry.”

On Tuesday, Intel CEO Patrick Gelsinger introduced the sixth generation of Xeon chips for data centers and the Gaudi 3 AI accelerator chips, positioning the latter as a cost-effective alternative to Nvidia’s H100 at a price one-third lower.

The global race to develop generative AI applications has driven a surge in demand for the advanced chips that power the data centers behind these technologies. Nvidia and AMD, both led by Taiwan-born American CEOs who are also related to each other, were initially known in the gaming world for their GPUs. Those GPUs have since expanded beyond gaming and are now crucial for powering generative AI technologies like ChatGPT.

Su also highlighted the MI300X, launched last year, which she said offers superior inference performance, memory size, and compute capabilities. AMD’s roadmap now promises annual updates, with a new product family scheduled each year: MI350 in 2025 and MI400 in 2026. The annual cadence is meant to sustain AMD’s competitive position and pace of innovation in the evolving AI landscape.
