Some of the world’s largest companies have pinned their futures—and ours—on the widespread adoption of AI, a technology so complex and potentially dangerous that even its creators are urging a slowdown.

It seems like exactly the kind of industry US lawmakers would want to regulate heavily, much as the federal government oversees narcotics, cigarettes, or even TikTok. Yet Congress has yet to pass any AI legislation, and a bipartisan “roadmap” released last month faces an uncertain future, especially in an election year. Ironically, one of the roadmap’s priorities is keeping AI from disrupting the American electoral process.

That leaves the understaffed and underfunded Federal Trade Commission (FTC) and the Justice Department to try to keep Big Tech in check through enforcement.

According to my colleague Brian Fung, antitrust officials at the FTC and the Justice Department are close to finalizing an agreement on how to jointly oversee AI giants such as Microsoft, Google, Nvidia, and OpenAI. That suggests a broad crackdown is coming, but it may not come quickly enough: the AI boom is already in full swing.

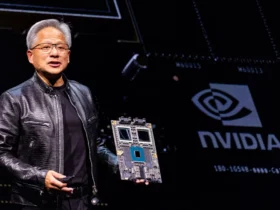

Nvidia, a chipmaker that was relatively unknown outside tech circles a year ago, recently joined the $3 trillion club, briefly surpassing Apple as the second most valuable publicly traded company in the US. Microsoft remains the most valuable company by market cap, thanks in part to its investments in OpenAI, the maker of ChatGPT.

These financial gains were made possible, in part, by the inaction of tech-challenged lawmakers in Washington. European officials, by contrast, formally adopted the world’s first standalone AI law this spring, five years after the rules were proposed.

The money matters enormously. AI was largely an academic pursuit until OpenAI released ChatGPT in late 2022, setting off a gold rush that turned AI into Wall Street’s hottest trend.

That is precisely the danger a group of current and former OpenAI employees is now warning about. In an open letter this week, they wrote, “AI companies have strong financial incentives to avoid effective oversight. So long as there is no effective government oversight of these corporations, current and former employees are among the few people who can hold them accountable to the public. Yet broad confidentiality agreements block us from voicing our concerns, except to the very companies that may be failing to address these issues.”

In essence, we are relying on newly wealthy tech insiders to self-regulate. What could possibly go wrong?
